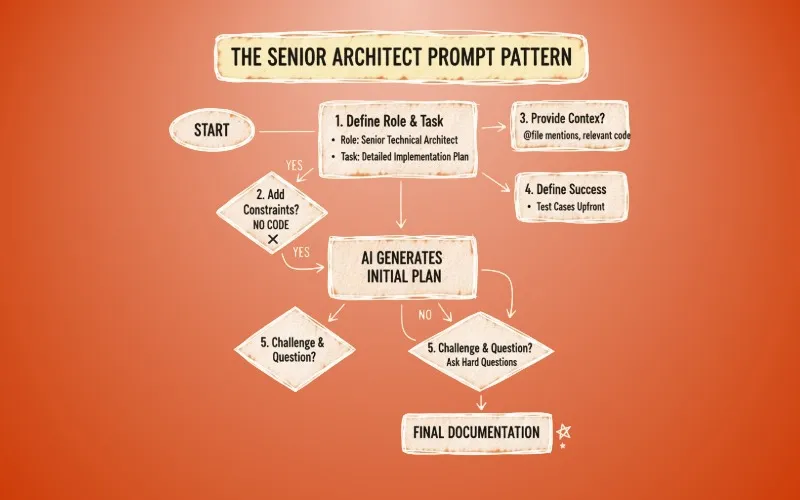

TL;DR: Tell the AI it’s a senior technical architect. Don’t let it code. Make it plan first. Then grill it with hard questions. Works like a charm.

The Discovery

The most efficient way to vibe code with coding agents like Claude Code or Cursor is to first ask the agent to generate detailed technical documentation on how the code will be implemented.

The Pattern

Here’s the exact prompt:

You are a senior technical architect creating documentation for the engineering team.

Can you please help me with {{detailed instructions}}

Guidelines:

- Do not write the code; instead, write a detailed tech doc on how we will implement it

- Before doing anything, read through all the relevant files and try to understand the patterns we use

- In the doc, list the files and code changes you plan to make eventually

Context:

@file1 @folder1 @folder2 @file2

Test Cases I will try at the end:

- scenario1

- scenario2

- scenario3

Please generate the file at docs/xyz_implementation_plan.md

Three things make this work.

1. The Role Changes Everything

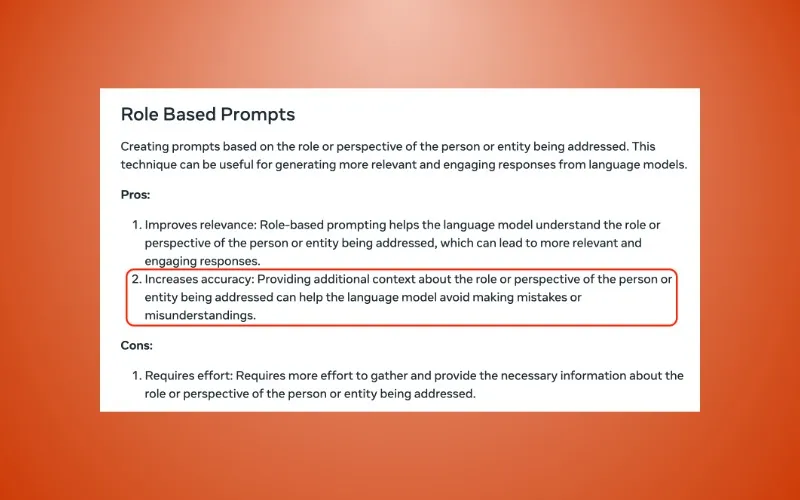

“You are a senior technical architect” isn’t just flavor text.

Research suggests that role prompting can significantly change how LLMs process information and generate responses. But here’s the catch: recent studies are split on its effectiveness, and some show role prompting can even degrade performance.

So why does this specific role work?

Senior architects think differently. They don’t jump to code. They consider systems, trade-offs, and edge cases.

That’s exactly what we want in documentation.

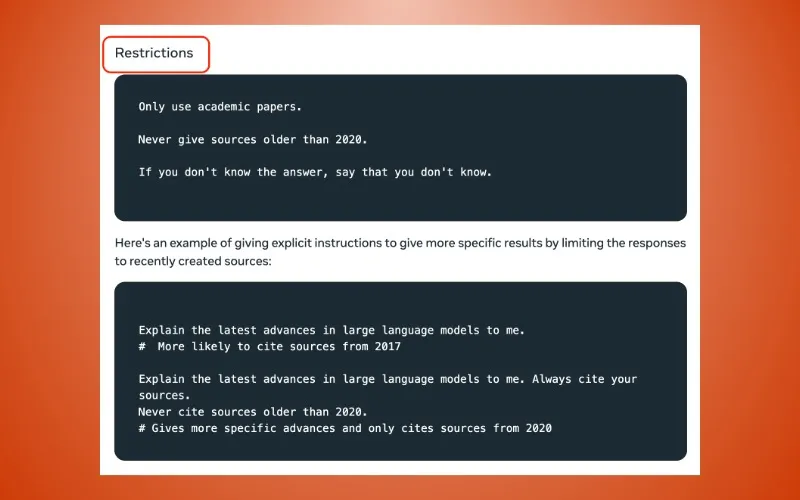

2. Constraints Create Quality

The magic line: “Do not write the code”

This forces the AI to explain concepts instead of hiding behind implementation.

As Google’s prompt engineering guide says, being specific with instructions gets better results. And nothing is more specific than telling it what NOT to do.

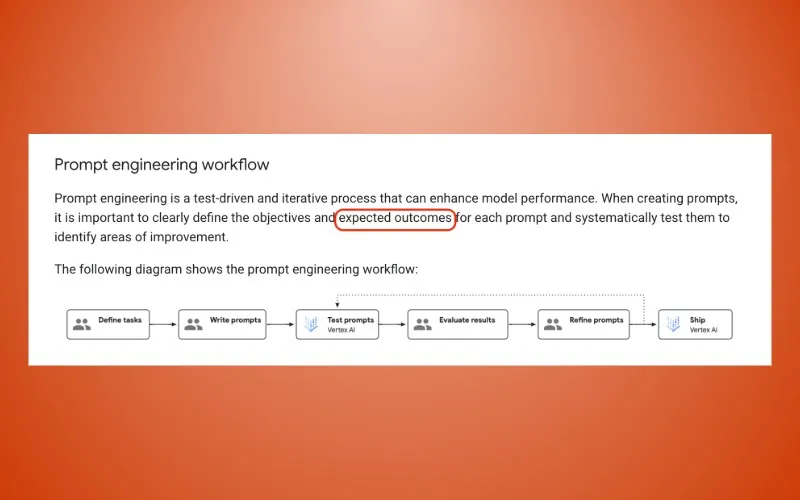

3. Test Cases Upfront

Adding test scenarios before the AI writes anything is crucial.

This isn’t new. Anthropic’s documentation notes that clearly defining objectives and expected outcomes for each prompt improves results.

But most people add tests after. That’s backwards.

The Secret Sauce: Challenge Everything

Here’s what nobody does.

After the AI generates the plan, hit it with questions:

“What happens if the user connects over a WebSocket without a secure connection?”

“Why might this not work?”

Each question makes the doc better.
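If you want to make the challenge round repeatable, it can be sketched as a loop that sends one question at a time and asks the agent to fold each answer back into the plan. This is a minimal sketch under assumptions: `challenge_plan` and `ask_agent` are hypothetical names, and `ask_agent` stands in for however you actually talk to your agent (chat panel, CLI, or API).

```python
from typing import Callable, List

# Illustrative challenge questions; replace them with ones specific to your feature.
CHALLENGE_QUESTIONS: List[str] = [
    "What happens if the user connects over a WebSocket without a secure connection?",
    "Why might this not work?",
]


def challenge_plan(
    plan_path: str,
    questions: List[str],
    ask_agent: Callable[[str], str],
) -> List[str]:
    """Send each challenge question to the agent, one per turn.

    `ask_agent` is a hypothetical callable: it takes a message for the
    coding agent and returns the agent's reply as a string.
    """
    replies: List[str] = []
    for question in questions:
        # Ask the question, then tell the agent to revise the plan doc,
        # keeping the original "no code" constraint in force.
        message = (
            f"{question}\n\n"
            f"Answer the question, then update {plan_path} to address it. "
            "Still do not write the code."
        )
        replies.append(ask_agent(message))
    return replies
```

Sending questions one at a time keeps each revision small and reviewable, rather than asking the agent to address every concern in one pass.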

Why This Actually Works

I dug into the research.

Role prompting works best when you clearly define the role and provide context about the task. That’s exactly what this pattern does.

But there’s more.

The “senior architect” role specifically triggers behaviors around:

- System thinking

- Edge case consideration

- Clear explanations without implementation details

It’s not just about tone. It’s about thinking patterns.

How to Use This

Step 1: Pick your role carefully

- Senior Technical Architect (system design)

- Security Engineer (threat modeling)

- Performance Engineer (optimization)

Step 2: Ban the code. Tell it explicitly: no implementation, just planning.

Step 3: Add your context. Use @file mentions in Cursor or Claude Code.

Step 4: Define success upfront. What should work? What shouldn’t? Write it first.

Step 5: Challenge it. Ask the hard questions. Be that annoying reviewer.
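Steps 1 through 4 can be sketched as a small prompt builder that fills in the template from earlier in this post, so the role, the no-code rule, the context, and the test cases are never forgotten. This is a hypothetical helper, not part of Cursor or Claude Code: the function name `build_plan_prompt`, the `ROLES` keys, and the example arguments are assumptions for illustration.

```python
from typing import List

# Roles from Step 1; the dictionary keys are illustrative shorthands.
ROLES = {
    "architect": "senior technical architect",
    "security": "security engineer",
    "performance": "performance engineer",
}


def build_plan_prompt(
    role: str,
    instructions: str,
    context_mentions: List[str],
    test_cases: List[str],
    output_path: str,
) -> str:
    """Assemble a planning-only prompt (hypothetical helper)."""
    lines = [
        # Step 1: the role.
        f"You are a {ROLES[role]} creating documentation for the engineering team.",
        "",
        f"Can you please help me with {instructions}",
        "",
        # Step 2: ban the code.
        "Guidelines:",
        "- Do not write the code; instead, write a detailed tech doc on how we will implement it",
        "- Before doing anything, read through all the relevant files and try to understand the patterns we use",
        "- In the doc, list the files and code changes you plan to make eventually",
        "",
        # Step 3: context as @ mentions, e.g. ["@file1", "@folder1"].
        "Context:",
        " ".join(context_mentions),
        "",
        # Step 4: success criteria written before any planning happens.
        "Test cases I will try at the end:",
    ]
    lines += [f"- {case}" for case in test_cases]
    lines += ["", f"Please generate the file at {output_path}"]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_plan_prompt(
        "architect",
        "{{detailed instructions}}",
        ["@file1", "@folder1", "@folder2", "@file2"],
        ["scenario1", "scenario2", "scenario3"],
        "docs/xyz_implementation_plan.md",
    ))
```

Paste the printed prompt into your agent; Step 5 still happens by hand, as follow-up messages once the plan exists.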

Resources: